Parquet Floor Room Decor

Parquet files are most commonly compressed with the Snappy compression algorithm. Snappy-compressed Parquet files are splittable and quick to inflate. Big data ...

Parquet is a columnar file format well suited for querying large amounts of data quickly. As you said above, writing data to Parquet from Spark is pretty easy. Also ...

The vectorized Parquet reader enables native record-level filtering using push-down filters, improving memory locality and cache utilization. If you disable the vectorized ...

Parquet Floor Room Decor

https://www.freebeerandhotwings.com/wp-content/uploads/2023/08/ftd-imgs-44-1.jpg

Halo Salt Room Wellness Spa

https://halosaltroomlex.com/wp-content/uploads/2022/03/salt-logo1.png

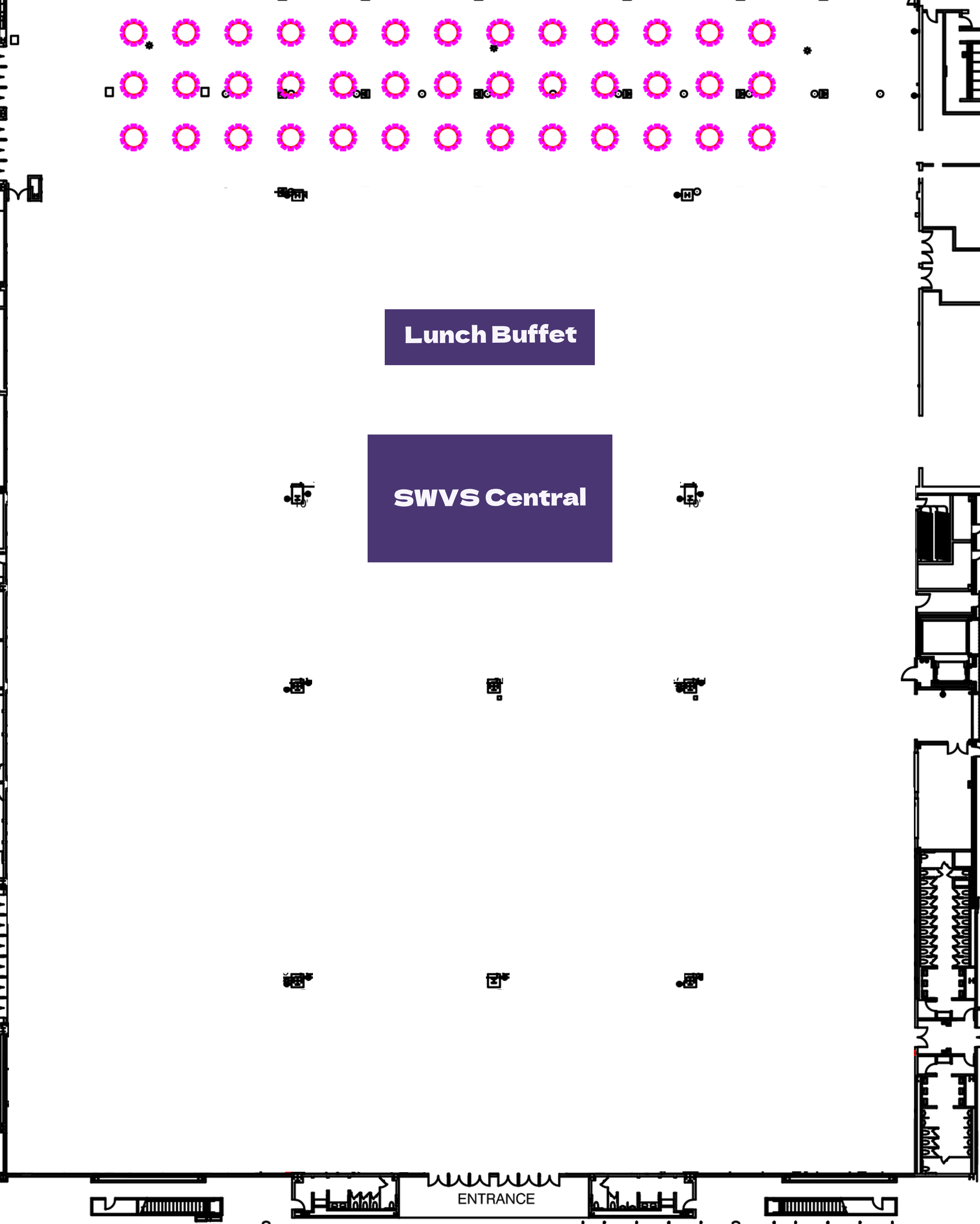

2024 SWVS Website Floor Plan

https://www.eventscribe.com/upload/planner/floorplans/Updated - Mixed_2x_8717 2_72.png

How to read a modestly sized Parquet data set into an in-memory pandas DataFrame without setting up a cluster computing infrastructure such as Hadoop or Spark? This is only a ...

What is Apache Parquet? Apache Parquet is a binary file format that stores data in a columnar fashion. Data inside a Parquet file is similar to an RDBMS-style table where ...

So @BhanunagasaiVamsi, I have reviewed your answer; however, because you may have thought that I was working with a Parquet file, your suggestion doesn't relate. This is ...

The reason being that pandas uses the pyarrow or fastparquet engines to process Parquet files, and pyarrow has no support for reading a file partially or reading a file by ...

More picture related to Parquet Floor Room Decor

Parquet Wood Effect Porcelain Floor Tiles Give A Fabulous Finish To

https://i.pinimg.com/originals/2a/5d/34/2a5d34326fb8fa43e584fa7405be5f5c.jpg

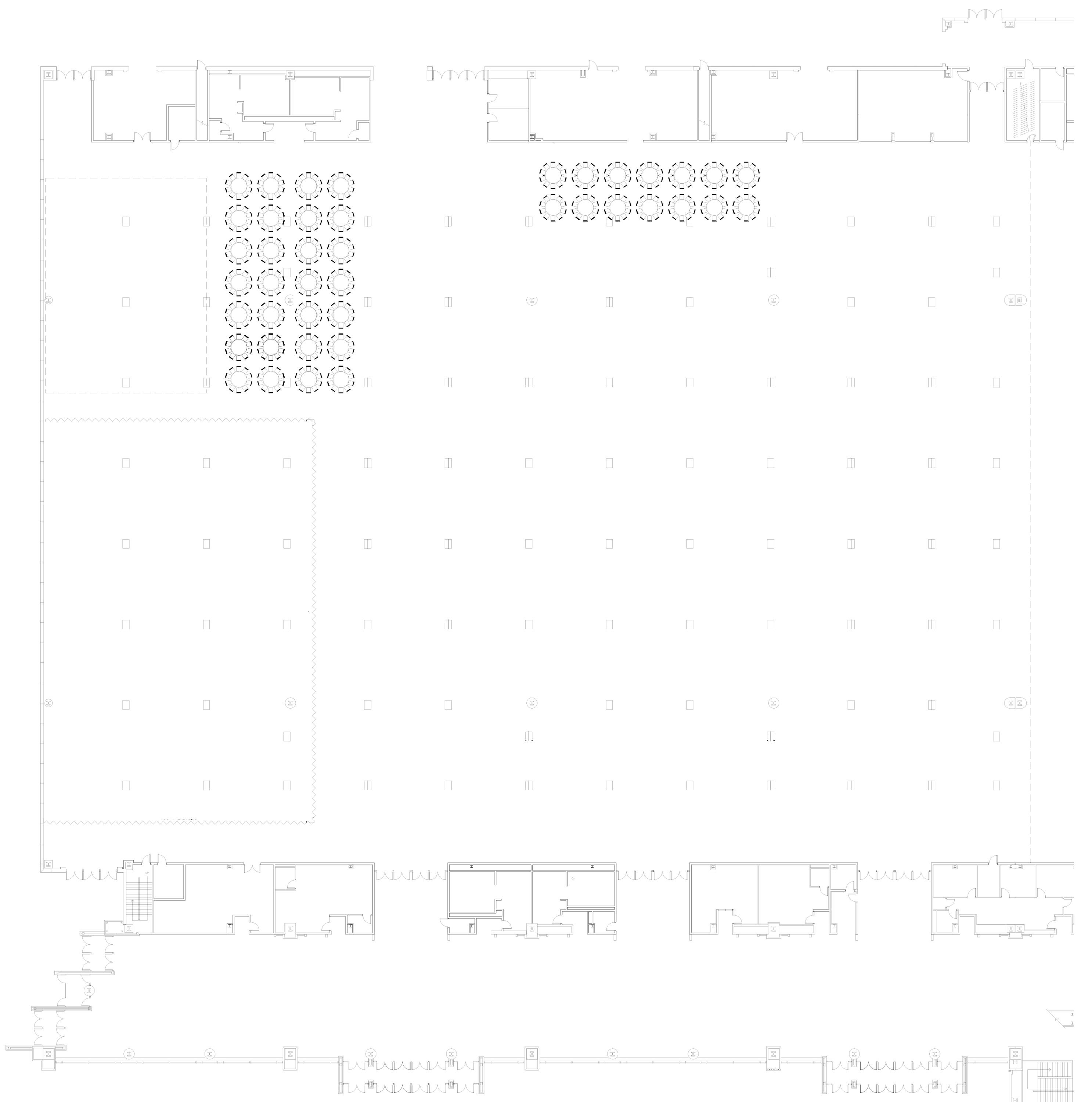

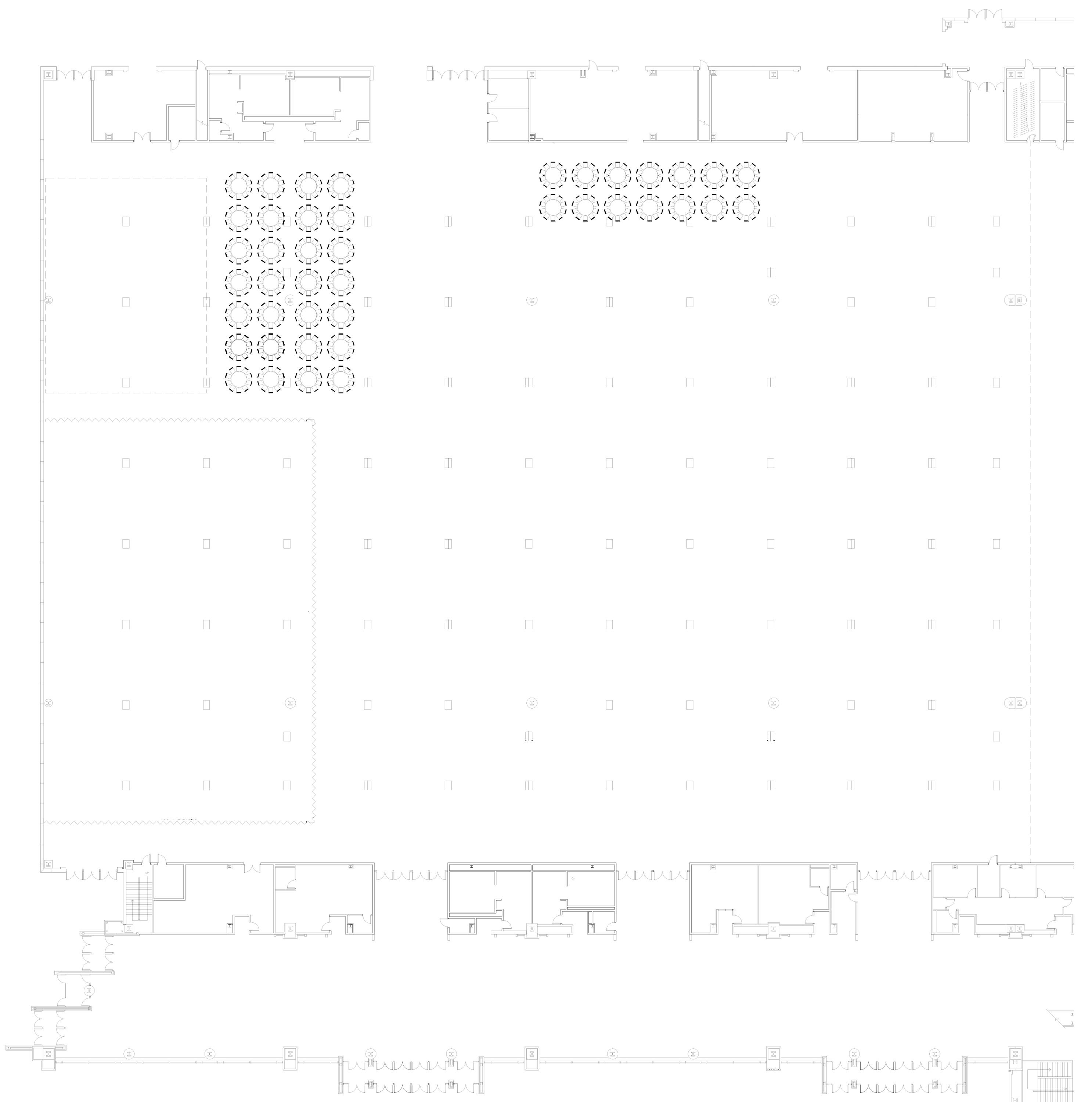

AJA Expo 2024 Floor Plan

https://www.eventscribe.com/upload/planner/floorplans/2024-Annual-Pretty-2x_81.png

ZIZIAFRIQUE FOUNDATION KENYA PAL Network

https://palnetwork.org/wp-content/uploads/2021/11/016-TZ-1-1071x1071.jpg

Is it possible to save a pandas DataFrame directly to a Parquet file? If not, what would be the suggested process? The aim is to be able to send the Parquet file to another ...

I need to read these Parquet files in order, starting from file1, and write them to a single CSV file. After writing the contents of file1, the contents of file2 should be appended to the same CSV without ...

[desc-10] [desc-11]

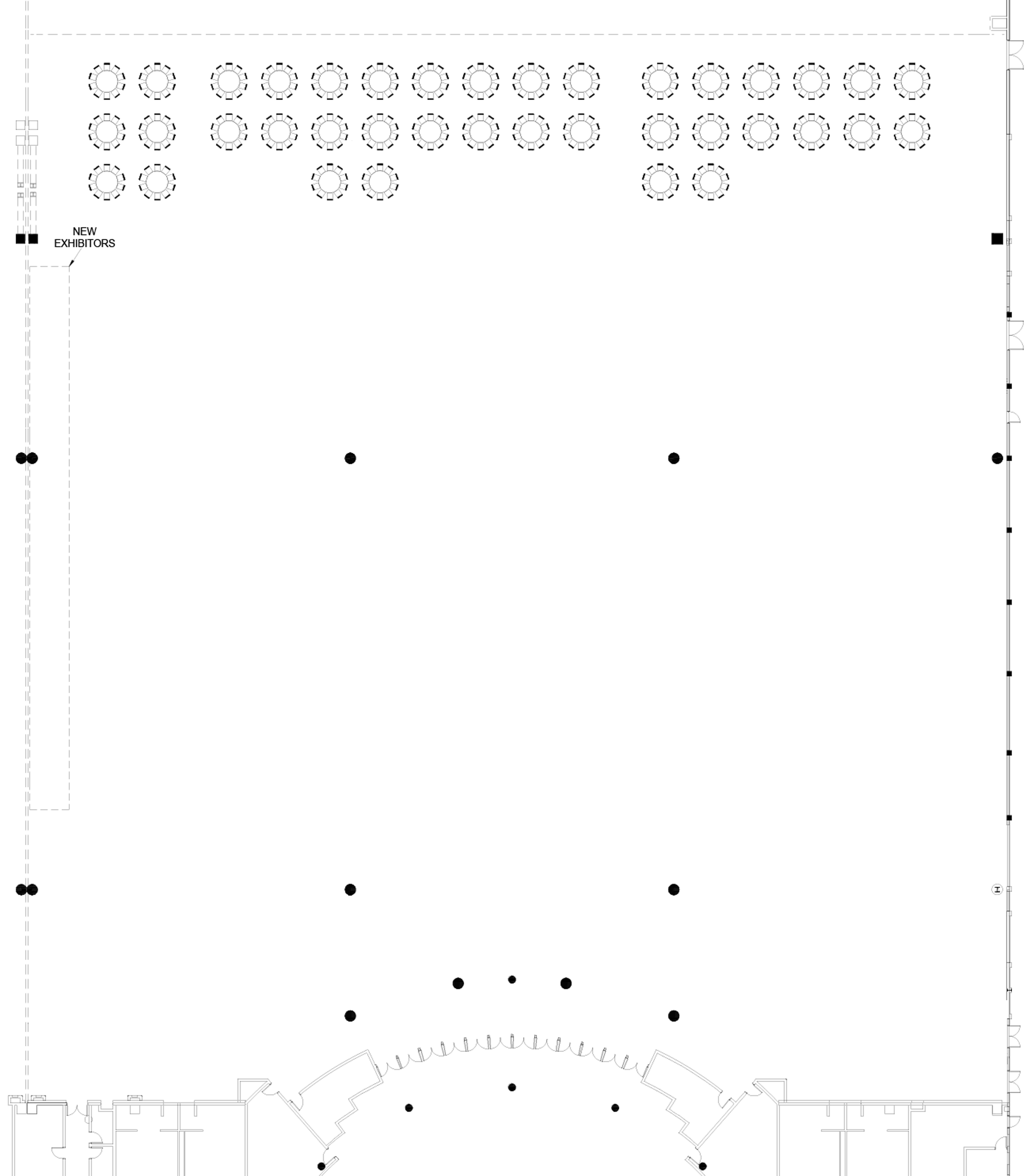

AJA Expo 2023 Floor Plan

https://www.eventscribe.com/upload/planner/floorplans/Mixed-3000_49.png

Hilltop Mansion 2715122 Rental Property Detail Page

https://i1.28hse.com/2023/02/202302231621561599399_large.jpg
